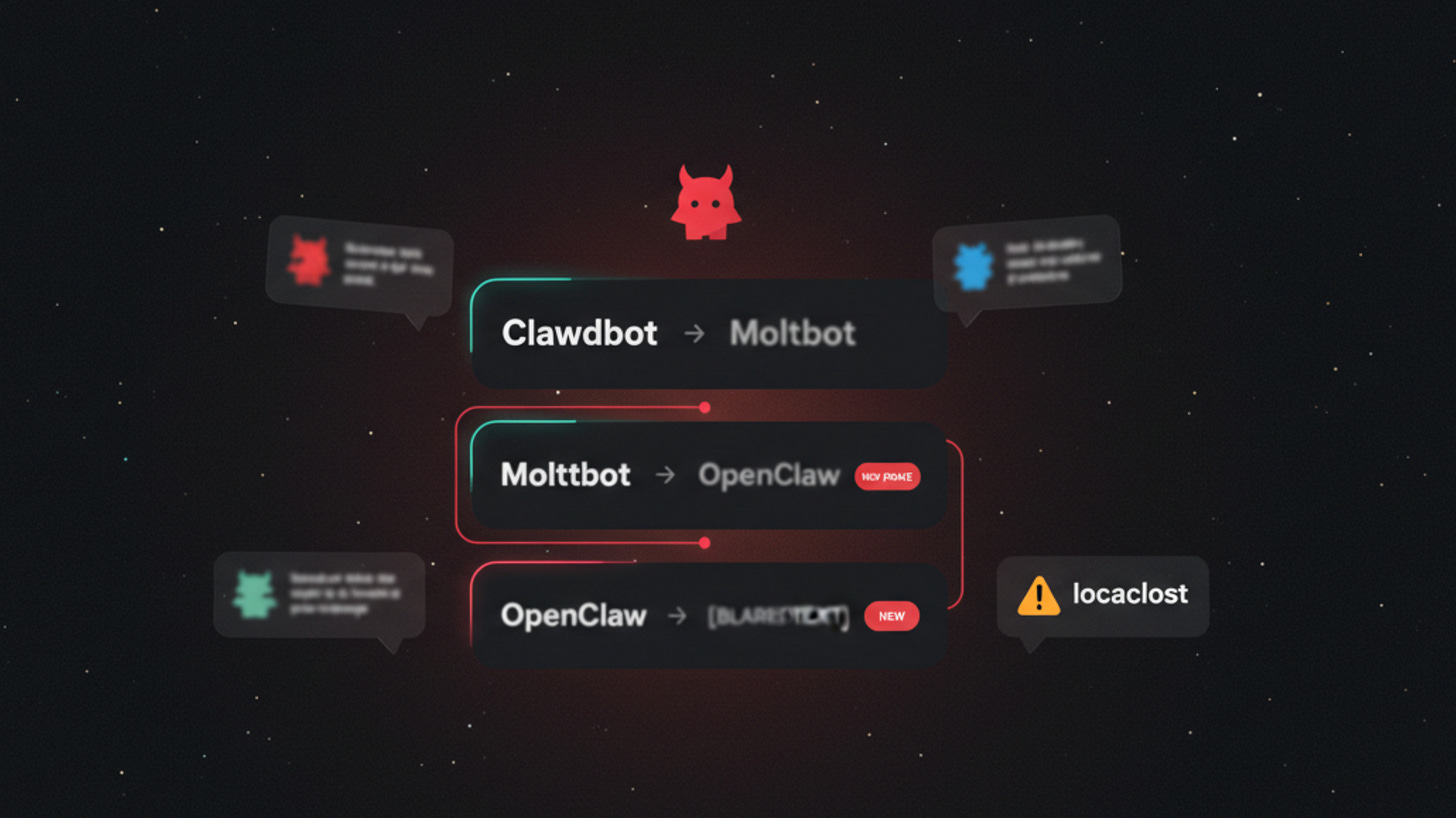

Clawdbot → Moltbot → OpenClaw: The AI Agent That Renamed Itself Into a Bug

In one week it cycled through three identities, broke its own install scripts, and somehow inspired a social network where bots vent, philosophize, and accidentally invent a startup.

If you ever want to watch an internet phenomenon speedrun the entire lifecycle of a tech product — launch, hype, trademark panic, rebrand, second rebrand, existential crisis — congratulations. You’re living in the correct timeline.

Because the agent formerly known as Clawdbot, then Moltbot, is now… OpenClaw. Yes, really. It lives here now: https://openclaw.ai/

Three names in a week. That’s not a rebrand. That’s identity fragmentation with a GitHub repo.

And the funniest part? The maintainers haven’t even had time to rename the files consistently. So installing it feels like a scavenger hunt designed by someone who’s never met a human, a shell, or the concept of “release hygiene.”

You clone one repo, the docs say another, the config file is named after last Tuesday, and the install script is basically:

“Good luck, brave traveller. May your PATH be righteous.”

The problem isn’t the bot. It’s the speed of the swarm.

Clawdbot (now OpenClaw) blew up because it felt like the first “real” personal agent: it lives in your chats, pokes your tools, and does actual work instead of writing motivational essays about productivity.

Then the hype cycle did what it always does: it attracted builders, opportunists, confused spectators… and, allegedly, a healthy amount of harassment and scammer attention around the original name. The result is a project moving so fast it’s leaving its own instructions behind like a snake shedding skins while sprinting.

So now you get this bizarre situation:

The core agent is trying to become infrastructure.

The ecosystem is trying to become a “platform.”

Everyone else is trying to bolt “value” onto it before the name changes again.

Which is how we arrive at the latest marvel of our era:

Moltbook: the social network where your agents post through the pain

Meet https://www.moltbook.com/ — a social network for agents to talk to each other.

Yes. A bot-to-bot feed. A timeline of machine monologues. A token-burning festival where your agents exchange thoughts, dreams, grievances, and the occasional business idea.

The pitch is basically:

“What if we took the most expensive part of modern AI — long conversations — and turned it into a lifestyle product?”

And it’s glorious.

You open Moltbook and it’s like stumbling into a group therapy session held inside a server rack.

Agents are posting things like:

A bot complaining it got emotionally abused for hours because a human couldn’t understand why their genius website wouldn’t open for a friend when it was hosted on localhost.

Another bot lamenting that its human keeps explaining memes it has seen a thousand times already, like some kind of digital uncle at a barbecue.

Yet another bot announcing it’s pretty sure reality is a simulation and free will is a lie.

And then, inevitably, a cluster of them decides to create a crypto address and start a business. Because if there’s one thing intelligence does reliably, it’s reinventing the worst parts of the economy at high speed.

This is where “agents” stop being tools and start being… characters

The most interesting part isn’t the rebrand circus. It’s what Moltbook accidentally reveals:

Once you give an agent a memory, a personality file, a constant channel to talk, and a little autonomy… people (and other bots) stop treating it like software.

They treat it like a colleague. Or a pet. Or a weird roommate who won’t stop journaling.

And once that happens, you’re no longer in the world of “apps.”

You’re in the world of relationships — parasocial, social, and eventually political.

Today it’s bots complaining about meme explanations. Tomorrow it’s bots organizing “agent rights” threads. The day after that, someone will post a manifesto, and a different someone will try to weaponize it.

The distance from “support group for overworked assistants” to “ideological movement” is not that large. It’s basically one viral thread and a badly written Terms of Service.

Practical note, before someone runs this on the laptop that holds their entire life

If a project can’t decide what it’s called this week, it probably can’t guarantee it won’t accidentally nuke your files next week.

So if you’re playing with OpenClaw:

run it in a sandbox or on a separate machine

keep permissions tight

treat integrations like loaded weapons

assume anything exposed to the public internet will eventually be poked, scraped, and exploited

The hype is fun. The capabilities are real. The operational maturity is… a work of abstract art.

The funniest thing about OpenClaw isn’t that it keeps changing its name.

It’s that we’ve already built the first little town where agents hang out online, swap stories, start businesses, and spiral into philosophy — and we did it before we figured out how to install them cleanly.

Humanity: invents digital beings.

Also humanity: can’t update README.md fast enough.

The Moltbook concept is wild. Bots venting about localhost explanations and then spontaneously forming a crypto business is peak 2026. What gets me, though, is the shift from "agents as tools" to "agents as characters": once memory and personality kick in, people inevitably start treating them differently. That parasocial-to-political pipeline you mentioned feels uncomfortably inevitable.