Learn AI From Scratch in 2026 (Without Getting Scammed by “Vibes”)

Seven GitHub repos that actually turn you into a builder — not a certificate collector.

Stop “studying AI.” Start shipping small, real things. Here’s the shortest path that works.

There are two kinds of people trying to “learn AI” in 2026.

The first kind buys a course called something like “Become an AI Wizard in 14 Days.” The instructor has a neon thumbnail, a microphone the size of a small planet, and a spiritual relationship with the word “synergy.” Three modules later, you’ve learned the sacred art of copying a Colab link and posting “excited to announce” on LinkedIn.

The second kind opens GitHub, gets mildly nauseous, and then — crucially — keeps going.

This post is for the second kind.

Because here’s the uncomfortable truth: you don’t learn AI by watching it. You learn AI by building tiny, real things until your brain starts seeing patterns. The same way you “learn cooking” by setting something on fire, then setting something else on fire, and eventually producing food.

So, if you want a clean, non-delusional path from zero to “I can actually do this,” these are the seven GitHub repositories that pay for themselves in competence.

And yes: you can do them in public. Which is the best learning strategy and also the best career strategy, because recruiters love evidence and hate vibes.

The 7 Repos That Actually Teach You AI (In the Correct Order)

1. Karpathy — Neural Networks: Zero to Hero

If AI has a front door, this is it.

You’ll learn what a neural net actually is, why backprop works, and why half the internet is confidently wrong about it. It’s hand-holdy in the right way: “here’s the math, now implement it, now feel pain, now understand.”

What to build after:

A tiny character-level text generator

A minimal classifier on your own dataset (something dumb and fun)
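
For the character-level generator, the smallest possible starting point is a bigram model: count which character follows which, then sample. The sketch below is a count-based stand-in, not a neural net, and the function names (`train_bigram`, `generate`) and the toy corpus are made up for illustration. Treat it as a warm-up before you replace the counting with a network you train yourself.

```python
import random
from collections import defaultdict

def train_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(lambda: defaultdict(int))
    for current, following in zip(text, text[1:]):
        counts[current][following] += 1
    return counts

def generate(counts, start, length, seed=0):
    """Sample characters one at a time, weighted by the bigram counts."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # dead end: this character was never followed by anything
            break
        chars = list(followers)
        weights = [followers[c] for c in chars]
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)

# Hypothetical toy corpus; swap in any text file you like.
corpus = "the cat sat on the mat. the rat sat on the cat."
model = train_bigram(corpus)
sample = generate(model, start="t", length=40)
print(sample)
```

The output is gibberish that looks vaguely like the corpus, which is the point: every pair of adjacent characters in it was seen during "training," and nothing longer than a pair is modeled.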

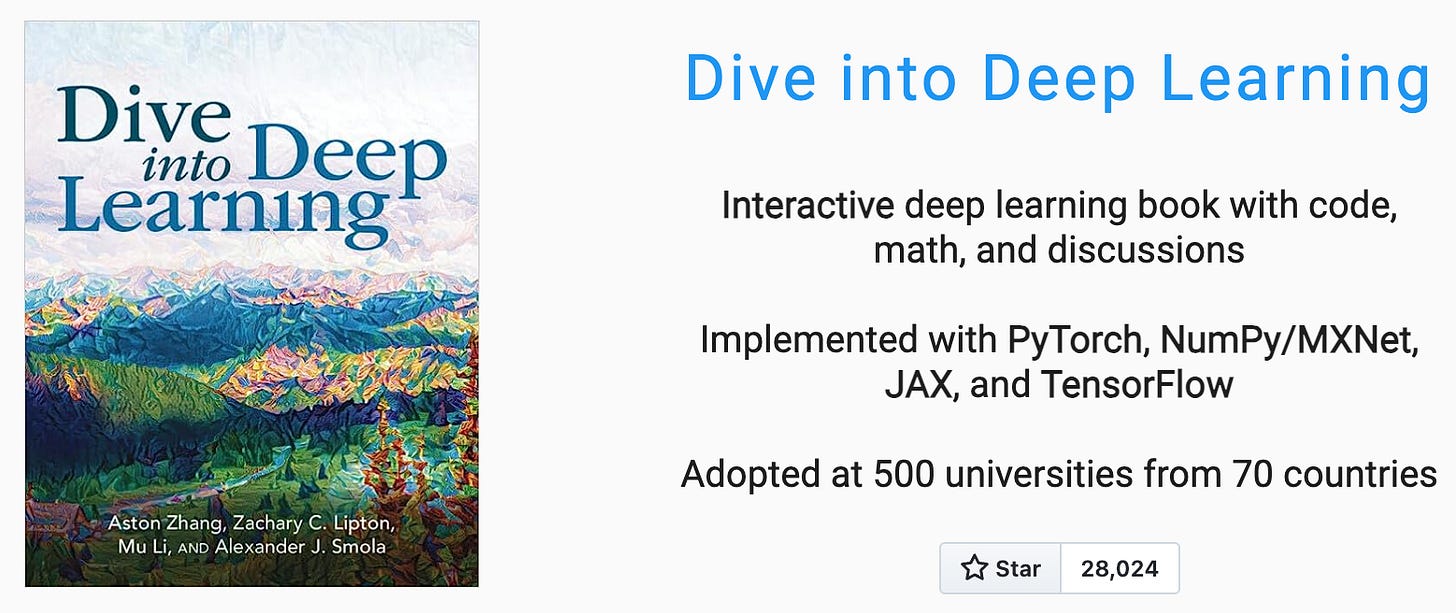

2. Dive into Deep Learning (D2L)

This is the book that doesn’t just explain — it makes you do the reps.

It’s structured, it has code, it has exercises, and it covers the foundations properly. If you want depth without disappearing into an academic swamp, D2L is the sweet spot.

What to build after:

A training loop you wrote yourself (not “import magic, pray”)

A small vision model or sequence model you can explain end-to-end
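
To make "a training loop you wrote yourself" concrete, here is a minimal sketch in plain Python: linear regression trained with full-batch gradient descent, with the forward pass, gradients, and update step all written out by hand. The synthetic data, the learning rate, and the names `make_data` and `train` are assumptions chosen for illustration, not anything taken from D2L.

```python
import random

def make_data(n=200, seed=0):
    """Synthetic data: y = 3x + 2 plus a little Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [3.0 * x + 2.0 + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys

def train(xs, ys, lr=0.1, epochs=200):
    """Full-batch gradient descent on mean squared error for y_hat = w*x + b."""
    w, b = 0.0, 0.0
    n = len(xs)
    history = []
    for _ in range(epochs):
        # Forward pass: prediction errors and the loss
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        loss = sum(e * e for e in errors) / n
        history.append(loss)
        # Backward pass: gradients of the loss with respect to w and b
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Update step
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, history

xs, ys = make_data()
w, b, history = train(xs, ys)
print(f"w={w:.2f} b={b:.2f} first_loss={history[0]:.3f} last_loss={history[-1]:.3f}")
```

If you can point at each of the three commented steps and say what it does, you can read the training loop of almost any framework.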

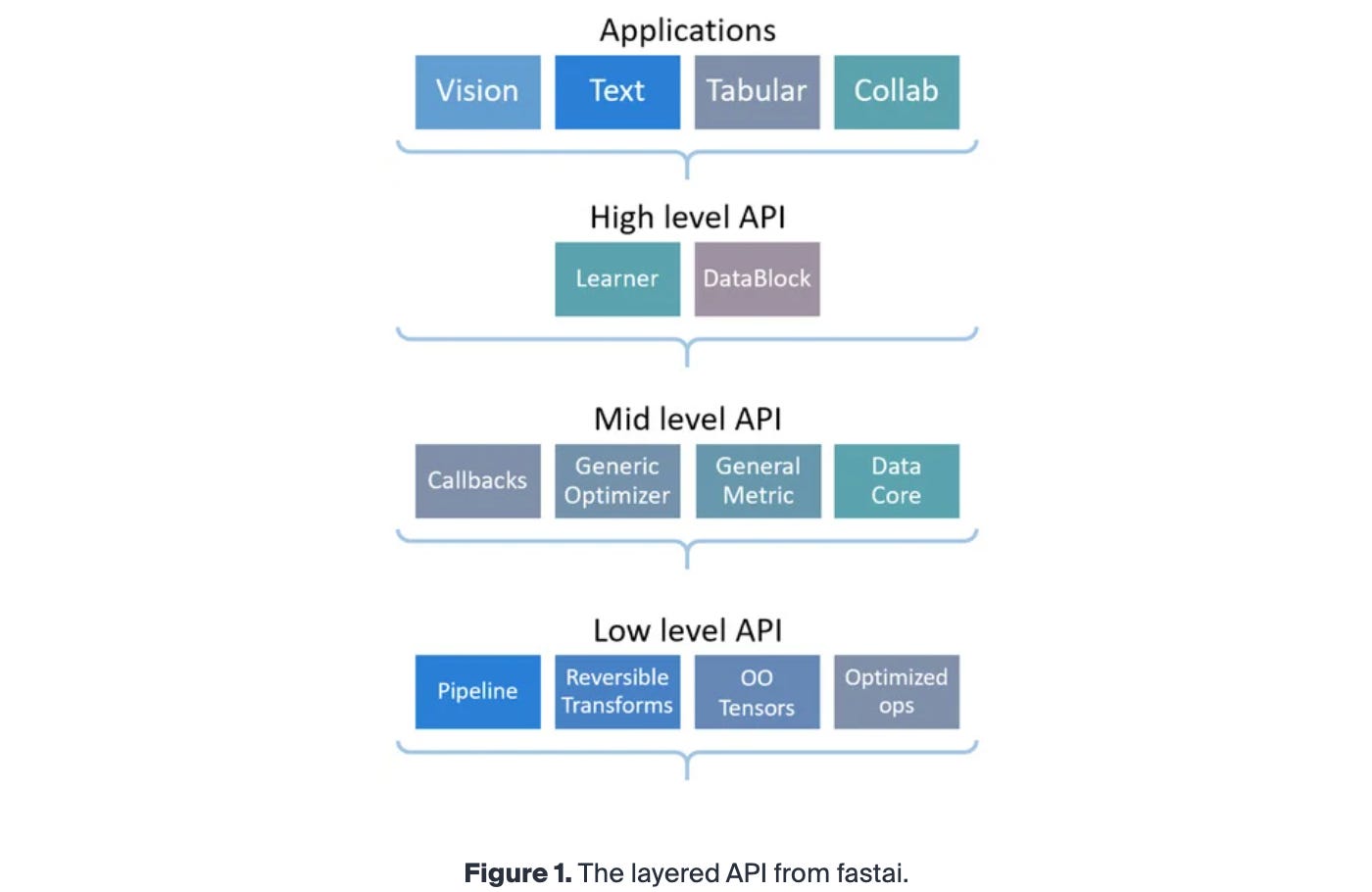

3. FastAI — Fastbook

FastAI is the opposite of “start with proofs and cry.”

It gets you to useful results quickly, while still teaching the underlying ideas. It’s very good at the part most learners miss: turning theory into experiments.

What to build after:

A model that solves one real problem for you (personal or work)

A proper experiment log: dataset, baseline, metric, improvement
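
An experiment log does not need tooling to be useful. A minimal sketch, assuming one JSON line per run: the `Experiment` fields, the run names, and every number below are made-up placeholders that only show the shape of the record (dataset, baseline, metric, improvement).

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class Experiment:
    """One row of the log. All field names here are hypothetical."""
    name: str
    dataset: str
    metric: str
    baseline: float
    result: float
    notes: str = ""

def to_log_line(exp):
    """Serialize one experiment as a JSON line, adding improvement over baseline."""
    record = asdict(exp)
    record["improvement"] = round(exp.result - exp.baseline, 4)
    return json.dumps(record, sort_keys=True)

# Made-up placeholder numbers, only here to show the shape of the log.
experiments = [
    Experiment("majority-class", "tickets-v1", "macro_f1", 0.61, 0.61, "baseline itself"),
    Experiment("tfidf-logreg", "tickets-v1", "macro_f1", 0.61, 0.68, "added bigrams"),
]
log_lines = [to_log_line(e) for e in experiments]
for line in log_lines:
    print(line)
```

Appending lines like these to a file in the repo is enough to answer "did that change actually help?" three weeks later.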

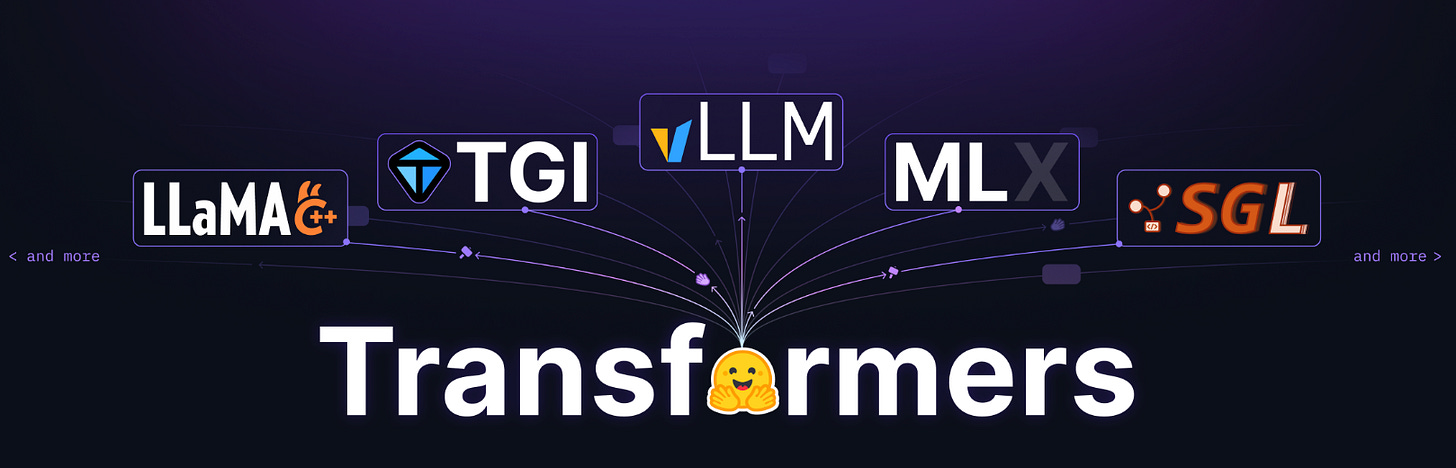

4. Hugging Face Transformers

Welcome to the modern world.

Transformers is the de facto standard library for contemporary NLP and LLM work. Around it sits the rest of the Hugging Face ecosystem: tokenizers, fine-tuning, evaluation, model cards. Learn this and you can actually operate in today’s tooling reality.

What to build after:

Fine-tune a small model for one narrow task

Evaluate it properly (accuracy is not a personality)
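
Why accuracy alone is not enough shows up quickly on imbalanced data. The sketch below computes accuracy, precision, recall, and F1 by hand in plain Python, with no Hugging Face calls involved. The labels are toy data invented for the example, and `binary_metrics` is a hypothetical helper name.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Toy labels: 95 negatives followed by 5 positives (imbalanced on purpose).
y_true = [0] * 95 + [1] * 5

# "Model" A never predicts the positive class.
always_negative = [0] * 100
# "Model" B raises 6 false alarms but catches 4 of the 5 positives.
noisy_but_useful = [1] * 6 + [0] * 89 + [1] * 4 + [0] * 1

metrics_a = binary_metrics(y_true, always_negative)
metrics_b = binary_metrics(y_true, noisy_but_useful)
print("A:", metrics_a)
print("B:", metrics_b)
```

Model A wins on accuracy (0.95 against 0.93) while finding none of the positives. Model B is the one you would actually want, and only recall and F1 show it.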

5. Made With ML

This is the repo that turns “I trained a model” into “I built a system.”

Pipelines, data versioning, deployment, monitoring, testing — the stuff that makes ML real in business. If you want a job, this is where you stop being a hobbyist.

What to build after:

An end-to-end project with versioned data + reproducible training

A minimal monitoring dashboard or drift check
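
A drift check can start as one function. The sketch below uses the Population Stability Index (PSI) on a single numeric feature, which is one common choice and not necessarily what Made With ML itself uses. The equal-width binning, the synthetic Gaussian data, and the thresholds mentioned afterwards are all assumptions for illustration.

```python
import math
import random

def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp outliers into the edge bins
        # Floor at eps so empty bins do not cause log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))

# Synthetic feature values: a training sample, fresh data from the same
# distribution, and data whose mean has shifted by one standard deviation.
rng = random.Random(0)
training = [rng.gauss(0.0, 1.0) for _ in range(2000)]
same_dist = [rng.gauss(0.0, 1.0) for _ in range(2000)]
shifted = [rng.gauss(1.0, 1.0) for _ in range(2000)]

psi_same = psi(training, same_dist)
psi_shifted = psi(training, shifted)
print(f"same distribution: {psi_same:.3f}")
print(f"shifted mean:      {psi_shifted:.3f}")
```

A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift. Those cutoffs are conventions, not laws, so calibrate them against your own data before wiring them to an alert.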

6. Machine Learning Systems Design (Chip Huyen)

Because AI isn’t just models. It’s tradeoffs.

This teaches you how to think like someone responsible for outcomes: metrics, data quality, feedback loops, failure modes, infrastructure constraints. It’s basically “how to not embarrass yourself in production.”

What to build after:

A one-page design doc for your ML project (inputs, outputs, risks)

An evaluation plan that includes “how it fails” not just “how it wins”
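
One cheap way to put "how it fails" into an evaluation plan is to report the metric per slice instead of as one overall number. A minimal sketch, assuming each record carries a slice attribute: the `language` field, the record layout, and the counts are invented for the example.

```python
from collections import defaultdict

def accuracy_by_slice(records, slice_key):
    """Accuracy computed separately for each value of slice_key."""
    totals, correct = defaultdict(int), defaultdict(int)
    for r in records:
        s = r[slice_key]
        totals[s] += 1
        correct[s] += int(r["label"] == r["prediction"])
    return {s: correct[s] / totals[s] for s in totals}

def make_records(language, n_total, n_correct):
    """Build toy records where the first n_correct predictions match the label."""
    return [
        {"language": language, "label": 1, "prediction": 1 if i < n_correct else 0}
        for i in range(n_total)
    ]

# Made-up evaluation set: 80 English examples, 20 German ones.
records = make_records("en", 80, 76) + make_records("de", 20, 8)

overall = sum(r["label"] == r["prediction"] for r in records) / len(records)
per_slice = accuracy_by_slice(records, "language")
print(f"overall={overall:.2f}")
print(per_slice)
```

The overall score of 0.84 looks respectable. The per-slice view shows 0.95 on English and 0.40 on German, which is the failure mode the design doc should name before a user finds it.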

7. Awesome Generative AI Guide

This is the curated map — after you’ve got your legs under you.

GenAI changes fast. The only way to keep up is to have a high-signal index you can skim and revisit. Use it as your “what’s worth reading” filter, not as a place to camp for six months.

What to build after:

A small RAG or agent project with a clear evaluation method

A write-up that explains what worked, what didn’t, and why
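
For a RAG project, "a clear evaluation method" can begin with the retrieval half: for each test question, did the right document come back? The sketch below measures hit rate at k using a toy word-overlap retriever in place of a real embedding model. The documents, the questions, and the names `retrieve` and `hit_rate_at_k` are hypothetical.

```python
def tokenize(text):
    """Lowercase and split on whitespace; deliberately naive."""
    return set(text.lower().split())

def retrieve(query, docs, k=1):
    """Rank documents by word overlap with the query (a toy stand-in for a real retriever)."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda doc_id: len(q & tokenize(docs[doc_id])), reverse=True)
    return ranked[:k]

def hit_rate_at_k(eval_set, docs, k=1):
    """Fraction of queries whose expected document appears in the top k results."""
    hits = sum(1 for query, expected in eval_set if expected in retrieve(query, docs, k))
    return hits / len(eval_set)

# Made-up knowledge base and labeled questions.
docs = {
    "refunds": "refunds are issued within 14 days of a return request",
    "shipping": "standard shipping takes 3 to 5 business days",
    "warranty": "the warranty covers hardware defects for two years",
}
eval_set = [
    ("how long does shipping take", "shipping"),
    ("when are refunds issued", "refunds"),
    ("what does the warranty cover", "warranty"),
    ("is a broken screen covered", "warranty"),  # paraphrase with little shared vocabulary
]

score = hit_rate_at_k(eval_set, docs, k=1)
misses = [q for q, expected in eval_set if expected not in retrieve(q, docs, k=1)]
print(f"hit rate@1 = {score:.2f}")
print("misses:", misses)
```

Three of the four questions hit. The paraphrased one misses because word overlap cannot connect "covered" to "covers," and that miss is exactly the kind of finding that belongs in the write-up.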

The Part Everyone Gets Wrong: “Learning” vs “Shipping”

If you take nothing else from this post, take this:

AI skill compounds when you ship artifacts.

Not when you consume content.

So here’s a brutally effective weekly loop:

Mon–Tue: learn one concept + implement a tiny demo

Wed: break it with edge cases

Thu: write a short README explaining what you built

Fri: post it publicly (with code + what you learned)

Weekend: build one slightly bigger thing that reuses the same pieces

Do that for 8–12 weeks and you’ll have 8–12 public artifacts and a skill level your current self wouldn’t recognize.

A Practical “2026 Portfolio” Strategy

If you want this to translate into opportunities:

Pick one domain (finance, devtools, healthcare, gaming, education — anything)

Build three small projects:

one classic ML

one deep learning

one “modern” (LLM / RAG / agent — but evaluated)

For each project, publish:

repo

short demo (even a video/gif)

a one-page “system design + evaluation” note

That is more convincing than a thousand badges.

In 2026, learning AI isn’t rare.

Finishing is rare.

Anyone can start a repo. Almost nobody completes one, documents it, measures it, and improves it. Do that and you’ll stand out without doing anything dramatic. You’ll simply be… competent. Which is now the most contrarian move on the internet.

If you’ve got other repos that genuinely taught you something (not just “look, a list”), send them. I’m always collecting the ones that produce builders.